A hobbyist developer building AI language models that speak Victorian-era English "just for fun" got an unexpected history lesson this week when his latest creation mentioned real protests from 1834 London—events the developer didn't know had actually happened until he Googled them.

"I was interested to see if a protest had actually occurred in 1834 London and it really did happen," wrote Reddit user Hayk Grigorian, who is a computer science student at Muhlenberg College in Pennsylvania.

For the past month, Grigorian has been developing what he calls TimeCapsuleLLM, a small AI language model (like a pint-sized distant cousin to ChatGPT) which has been trained entirely on texts from 1800–1875 London. Grigorian wants to capture an authentic Victorian voice in the AI model's outputs. As a result, the AI model ends up spitting out text that's heavy with biblical references and period-appropriate rhetorical excess.

Grigorian's project joins a growing field of researchers exploring what some call "Historical Large Language Models" (HLLMs) if they feature a larger base model than the small one Grigorian is using. Similar projects include MonadGPT, which was trained on 11,000 texts from 1400 to 1700 CE that can discuss topics using 17th-century knowledge frameworks, and XunziALLM, which generates classical Chinese poetry following ancient formal rules. These models offer researchers a chance to interact with the linguistic patterns and thought processes of past eras.

According to Grigorian, TimeCapsuleLLM's most intriguing recent output emerged from a simple test. When he prompted it with "It was the year of our Lord 1834," the AI model—which is trained to continue text from wherever a user leaves off—generated the following:

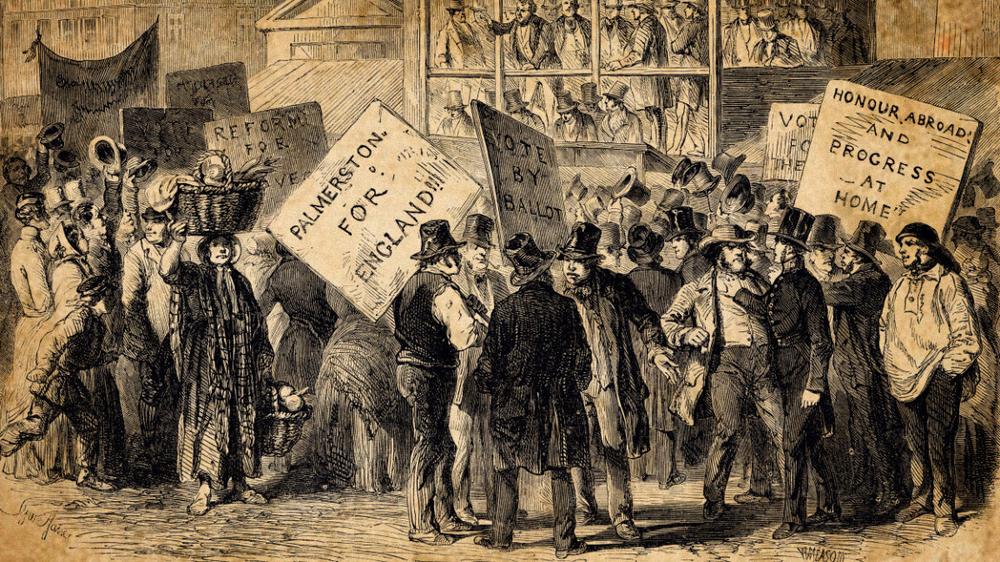

It was the year of our Lord 1834 and the streets of London were filled with protest and petition. The cause, as many re counted, was not bound in the way of private, but having taken up the same day in the day of Lord Palmerston, the public will receive a short statement of the difficulties under which the day of law has reached us. It is a matter of deep regret, that the present events in the history of the world are clear, and consequently will be'known. It is not true that the very men who first settled in the Gospel at Jerusalem should have so extensive and so interesting a record of the prosperity and prosperity

Curious about the accuracy, Grigorian did some fact-checking. "The output also brought up Lord Palmerston," he wrote, "and after a google search I learned that his actions resulted in the 1834 protests."

The details mentioned in the AI-generated text do appear to align with historical fact: 1834 saw significant civil unrest in England following the Poor Law Amendment Act 1834, and Palmerston served as Foreign Secretary for Great Britain during this turbulent period, later becoming prime minister.

On the one hand, this output is not very surprising. AI researchers who create AI language models like the kind that power ChatGPT have known for years that these models can synthesize realistic permutations of information learned from those texts. It's how every AI assistant today works.

But what makes this episode especially interesting is that a small hobbyist model trained by one man appears to have surprised him by reconstructing a coherent historical moment from scattered references across thousands of documents, connecting a specific year to actual events and figures without being explicitly taught these relationships. Grigorian hadn't intentionally trained the model on 1834 protest documentation; the AI assembled these connections from the ambient patterns in 6.25GB of Victorian-era writing.

"This is all from just 5-6GB of data," Grigorian wrote on Reddit. "Imagine the results with 30GB or more. I’m not sure if just scaling the data up will ever result in reasoning but even now it kinda feels like digital time travel."

Linguistic time travel through statistics

Rather than fine-tuning AI language models on modern text sources, developer Hayk Grigorian trains his hobbyist AI models from scratch using exclusively Victorian-era sources—over 7,000 books, legal documents, and newspapers published in London between 1800 and 1875. He calls the process "Selective Temporal Training" or STT. A custom tokenizer that chops words into simplified representations for easier processing excludes modern vocabulary entirely.

"If I fine-tune something like GPT-2, it's already pre-trained and that information won't go away," Grigorian explained on GitHub about modern data contamination. "If I train from scratch the language model won't pretend to be old, it just will be."

Using architectures from the "small language models" nanoGPT and Microsoft's Phi 1.5, Grigorian has trained three AI models so far, each showing improved historical coherence. Version 0, trained on just 187MB, produced Victorian-flavored gibberish. Version 0.5 achieved grammatically correct period prose but hallucinated facts. The current 700-million parameter version, trained on a rented A100 GPU, has begun generating historical references like the one seen above.

Grigorian is finding a reduction in confabulations over time as the emergent effect of scaling the size of high-quality training data, a well-known effect among AI researchers, especially when the models are very small. "Earlier models (v0 and v0.5) could mimic writing styles of the 19th century but would always hallucinate events, people, and facts," Grigorian wrote on Github. "This shows the model is beginning to remember things from the dataset."

For historians and digital humanities researchers, these kinds of experiments may be useful. Training AI language models on period texts may allow for the creation of interactive period linguistic models that offer a researcher a chance to converse with a simulated speaker of an extinct vernacular or language of the past. The results would not necessarily be factually rigorous due to confabulations, but they could be stylistically illuminating for someone studying antique syntax or vocabulary in use.

"I want to eventually try different cities also, maybe a Chinese, Russian, or Indian city model," Grigorian wrote, inviting collaboration with others on future AI models he might train. He makes the code, AI model weights, and documentation of his work publicly available on GitHub.

In an era of frequent AI confabulations, there's something refreshing about a model that accidentally tells the truth about the past. It's almost the opposite of hallucination—an AI model accidentally getting something correct. Call it a "factcident."

“That’s my emotional support lol”: Millennials are crashing out after Gen Z points out they end every text with “lol”

“That’s my emotional support lol”: Millennials are crashing out after Gen Z points out they end every text with “lol”