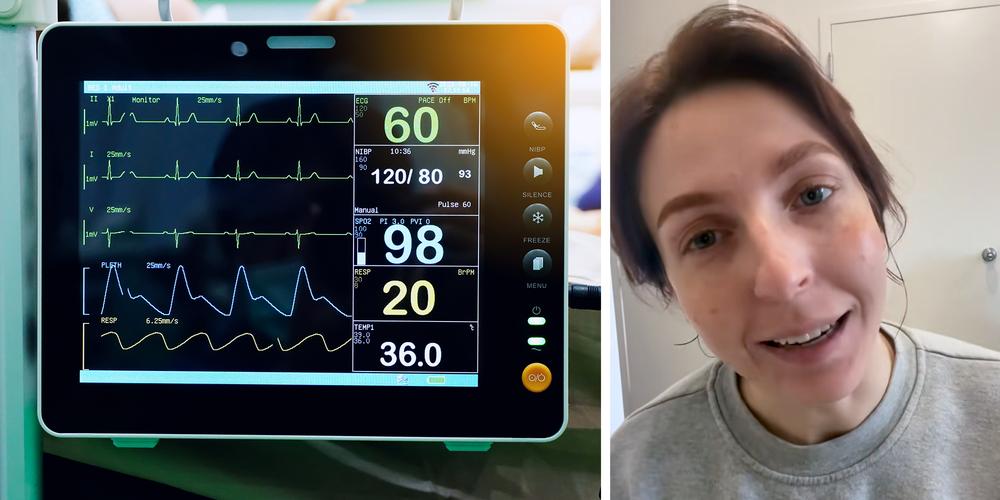

A woman went viral on TikTok after claiming her doctor used artificial intelligence to read her EKG—and the AI wrongly decided she’d had a heart attack.

Meg Bitchell (@megitchell) said she went in for a routine check-up before losing her current insurance. But she said she left with a shocking diagnosis.

According to her, the AI that read her EKG flagged her as someone who had suffered a heart attack and was “in really bad health.” Her doctor referred her to a cardiologist for more tests.

“I passively, for one month, thought I was going to die,” Bitchell said.

When she finally saw the cardiologist, she was told she was “fine.” Bitchell said the specialist explained that her primary care doctor had signed off on the AI’s reading without even looking at her chart.

“They have AI that reads the EKGs now,” Bitchell said, clearly frustrated.

“I HATE AI, I HATE AI,” she wrote in the caption of her clip. As of Friday, the video had pulled in more than 44,800 views.

The problem with letting AI call the shots

Bitchell’s story isn’t unique. AI systems that read EKGs can produce incorrect results for several reasons, including noisy or poor-quality recordings and limitations in the data the algorithm was trained on. These systems are supposed to help doctors, not replace them, but that only works if a human double-checks the result.

One big problem is bias. If an AI is trained primarily on data from one demographic group—such as white men—it may misread EKGs from individuals who don’t fit that profile. Models can also struggle when the data they encounter in the real world doesn’t resemble the data they were trained on. And unlike tools explicitly built for cardiology, general-purpose AIs like ChatGPT aren’t trained on enough annotated EKGs to make reliable diagnoses.

Even when the tech is solid, other factors can trip it up. A patient moving, an electrode being slightly out of place, or electrical interference from another device can create noise that mimics a heart problem. AI can mistakenly identify those artifacts as something serious. And since many models work like a “black box,” even doctors can’t always see how the AI got to its conclusion.

The bigger risk may be human overconfidence. If a physician signs off on whatever the computer says without reviewing the chart, mistakes slip through—just like what Bitchell says happened to her. That’s why experts recommend using AI as a second set of eyes, with a cardiologist reviewing the results before a patient is sent for further tests.

Viewers question why doctors would use AI

Commenters were shocked that a health care provider would rely on AI to read a patient’s EKG. Many wondered if that was even legal.

“This has to be medical malpractice,” one user wrote. “If it’s not, it’s only because the laws haven’t caught up with technology.”

“We should be able to opt out of having our medical info fed into AI,” another said.

“Algorithms are notoriously bad at reading EKGs,” added a third commenter. “That’s why we still train humans on reading them.”

Others pointed out that AI is already widely used in health care, which many found terrifying.

“I had one use AI to read the questionnaire on my autism screening,” one woman shared. “It said I was an alcoholic, even though I only have like one drink every three months.”

“I just had an older doctor tell me … that he uses ChatGPT to help him with treatment plans,” another said. “Countless businesses are popping up and telling doctors that it’s OK to use their AI tools in diagnosis now.”

Some encouraged Bitchell to take legal action over what happened.

“Sue the doctor for malpractice,” one person advised.

“I am a clinician and this is HIGHLY PROBLEMATIC,” another wrote. “You need to sue them.”

“Malpractice suit, anyone?” a third viewer asked.

The Daily Dot has reached out to Bitchell for more information via TikTok.
