Terminator writer-director James Cameron says that the spread of AI means he’s now fearful of the world ending as he predicted.

Thanks to Titanic, Avatar, and Avatar: The Way of Water, James Cameron is responsible for three of the four highest-grossing movies of all time.

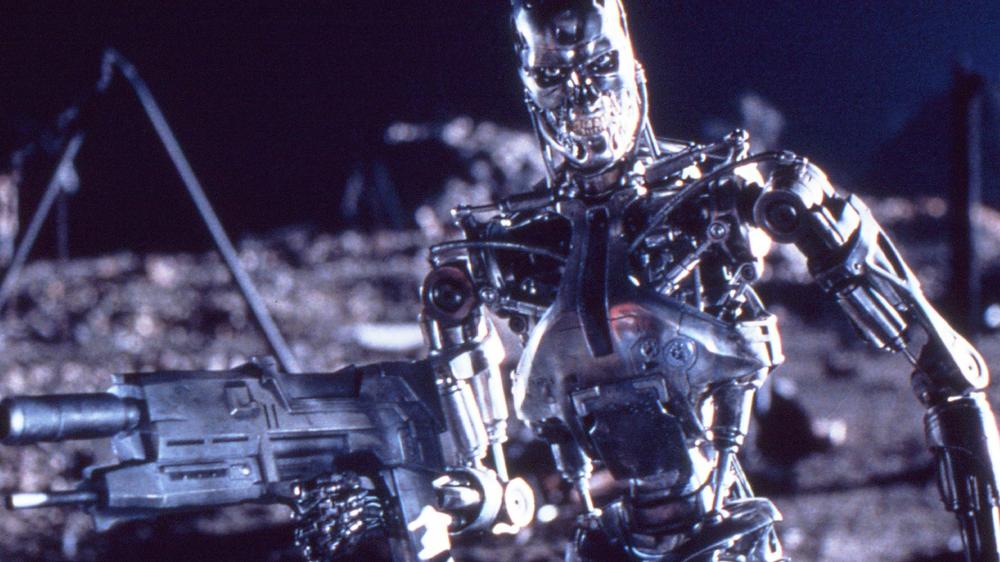

But before he was sinking ships or journeying to Pandora, the writer-director crafted Terminator and Terminator 2, acclaimed sci-fi movies about an artificially intelligent defense system becoming self-aware and starting a nuclear war to wipe out humanity.

Man fights back in both movies, and manages to avert ‘Judgment Day,’ at least temporarily. But in real life, Cameron isn’t so sure about our chances.

James Cameron fears AI + weapons systems = apocalypse

James Cameron plans to adapt the new novel Ghosts of Hiroshima into a movie about the horrors of the atomic bomb, which means the apocalypse is on his mind.

In a wide-ranging interview with Rolling Stone about the literal and metaphorical fallout from the bomb, Cameron was asked about adding AI to the equation.

“I do think there’s still a danger of a Terminator-style apocalypse where you put AI together with weapons systems, even up to the level of nuclear weapon systems, nuclear defense counterstrike, all that stuff,” said the writer-director.

“Because the theater of operations is so rapid, the decision windows are so fast, it would take a superintelligence to be able to process it, and maybe we’ll be smart and keep a human in the loop.

“But humans are fallible, and there have been a lot of mistakes made that have put us right on the brink of international incidents that could have led to nuclear war.”

Could superintelligence be the answer?

Even though Cameron sees AI as a major problem for mankind, he also believes it could provide us with solutions.

“I feel like we’re at this cusp in human development where you’ve got the three existential threats: climate and our overall degradation of the natural world, nuclear weapons, and superintelligence,” he continued.

“They’re all sort of manifesting and peaking at the same time. Maybe the superintelligence is the answer. I don’t know. I’m not predicting that, but it might be.

“I could imagine an AI saying, ‘guess what’s the best technology on the planet? DNA, and nature does it better than I could do it for 1,000 years from now, and so we’re going to focus on getting nature back where it used to be.’ I could imagine, AI could write that story compellingly.”
