Hundreds of thousands of conversations that users had with Elon Musk’s xAI chatbot Grok are easily accessible through Google Search, reports Forbes.

Whenever a Grok user clicks the “share” button on a conversation with the chatbot, it creates a unique URL that the user can use to share the conversation via email, text or on social media. According to Forbes, those URLs are being indexed by search engines like Google, Bing, and DuckDuckGo, which in turn lets anyone look up those conversations on the web.

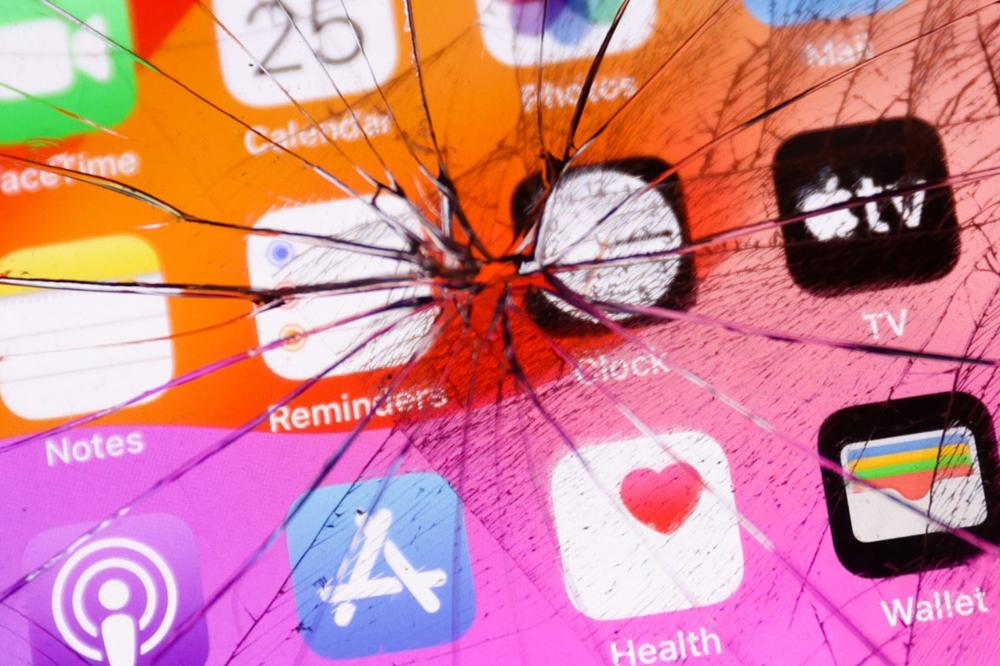

Users of Meta’s and OpenAI’s chatbots were recently affected by a similar problem, and as in those cases, the chats leaked by Grok give us a glimpse into users’ less-than-respectable desires: questions about how to hack crypto wallets, dirty chats with an explicit AI persona, and requests for instructions on cooking meth.

xAI’s rules prohibit the use of its bot to “promote critically harming human life” or to develop “bioweapons, chemical weapons, or weapons of mass destruction,” though that obviously hasn’t stopped users from asking Grok for help with such things anyway.

According to conversations made accessible by Google, Grok gave users instructions on making fentanyl, listed various suicide methods, handed out bomb construction tips, and even provided a detailed plan for the assassination of Elon Musk.

xAI did not immediately respond to a request for comment. We’ve also asked when xAI began allowing Grok conversations to be indexed.
